Author: Vaishnavi Shivde

What is Kubernetes?

Kubernetes, often abbreviated as K8s (the ‘8’ stands for the eight letters between ‘K’ and ‘s’), is a container orchestration platform that simplifies the deployment, scaling, and management of containerized applications.

Benefits of Using Kubernetes:

- Scalability: Kubernetes can scale applications up or down, either manually or automatically (for example, with the Horizontal Pod Autoscaler), so that they adapt to varying workloads.

- Resilience: With built-in self-healing, Kubernetes restarts failed containers and replaces Pods lost to node failures, keeping applications available even when individual components fail.

- Portability: Kubernetes provides a consistent platform for deploying and managing applications across diverse environments, from on-premises data centers to cloud infrastructure.

- Resource Efficiency: By scheduling containers onto nodes according to their declared CPU and memory requests, Kubernetes packs workloads efficiently and reduces wasted capacity.

- Automation: Kubernetes automates repetitive tasks such as deployment, scaling, and updates, freeing up valuable time for developers and operators.

What is the architecture of Kubernetes? What is the control plane?

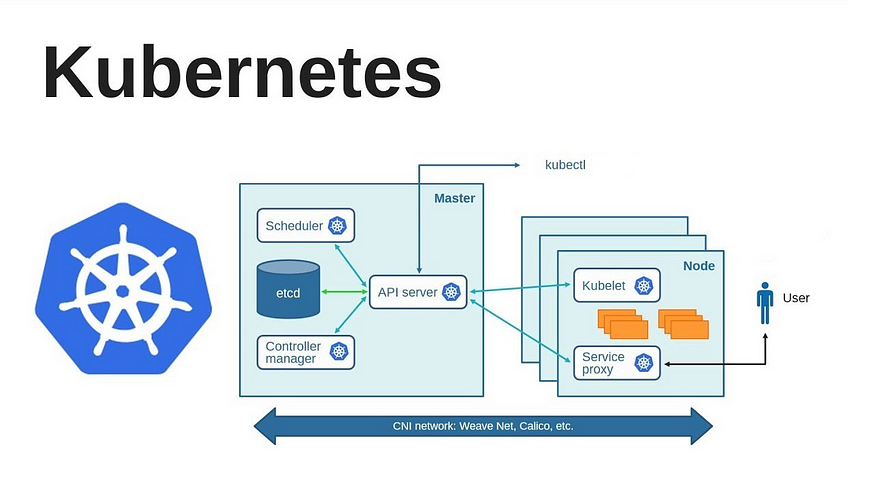

Kubernetes follows a client-server architecture that consists of two main components: the control plane and the data plane. The control plane is responsible for managing the state and configuration of the cluster, while the data plane is responsible for running the workloads and services on the cluster.

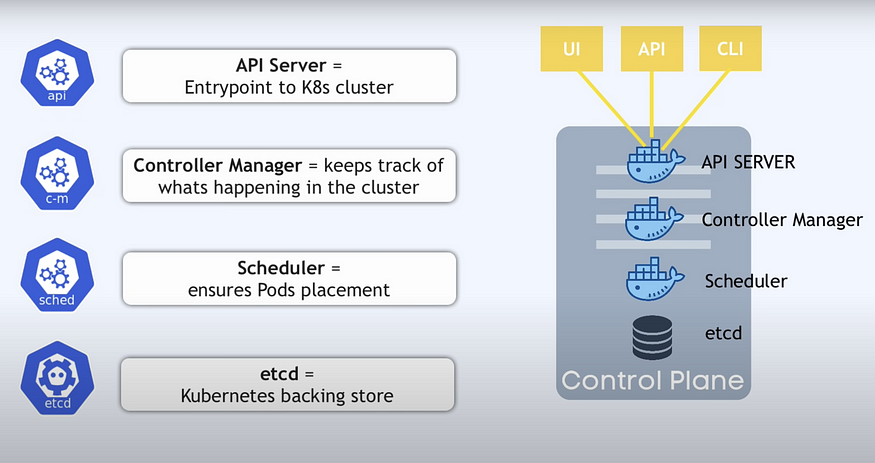

Control Plane Components

The control plane’s components make global decisions about the cluster (for example, scheduling), as well as detecting and responding to cluster events (for example, starting up a new pod when a Deployment’s replicas field is unsatisfied).

kube-apiserver

The API server is a component of the Kubernetes control plane that exposes the Kubernetes API. The API server is the front end for the Kubernetes control plane.

The main implementation of a Kubernetes API server is kube-apiserver. kube-apiserver is designed to scale horizontally; in other words, it scales by deploying more instances. You can run several instances of kube-apiserver and balance traffic between those instances.

etcd

etcd is a consistent and highly available key-value store used as Kubernetes’ backing store for all cluster data.
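To make "key-value backing store" concrete, here is a minimal in-memory sketch. It assumes Kubernetes' default key layout for namespaced objects, `/registry/<resource>/<namespace>/<name>`. The `ToyKeyValueStore` class and `registry_key` helper are hypothetical, the stored values are placeholders, and real etcd replication (Raft) and its gRPC API are not modelled.

```python
from typing import Dict, Optional


class ToyKeyValueStore:
    """In-memory stand-in for etcd (hypothetical): flat keys plus a global revision."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}
        self.revision = 0  # etcd increments a store-wide revision on every write

    def put(self, key: str, value: str) -> int:
        self.revision += 1
        self._data[key] = value
        return self.revision

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def get_prefix(self, prefix: str) -> Dict[str, str]:
        # A prefix (range) read is how "list every Pod in a namespace" maps onto flat keys.
        return {k: v for k, v in sorted(self._data.items()) if k.startswith(prefix)}


def registry_key(resource: str, namespace: str, name: str) -> str:
    # Layout assumed for namespaced objects under the default "/registry" prefix.
    return f"/registry/{resource}/{namespace}/{name}"


store = ToyKeyValueStore()
store.put(registry_key("pods", "default", "web-1"), '{"kind": "Pod"}')  # placeholder values
store.put(registry_key("pods", "default", "web-2"), '{"kind": "Pod"}')
store.put(registry_key("pods", "kube-system", "coredns"), '{"kind": "Pod"}')
print(list(store.get_prefix("/registry/pods/default/")))
# ['/registry/pods/default/web-1', '/registry/pods/default/web-2']
```

Because every object lives under a predictable prefix, listing all Pods in one namespace becomes a single prefix read. The revision counter hints at how watchers can ask for "changes since revision N".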

kube-scheduler

kube-scheduler is the control plane component that watches for newly created Pods with no assigned node and selects a node for each of them to run on.

Factors taken into account for scheduling decisions include: individual and collective resource requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines.
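The scheduler's decision can be pictured as two phases: filter out nodes that cannot run the Pod, then score the remaining ones and pick the best. The sketch below is loosely modelled on that idea, using only resource requests and a `nodeSelector`-style label match. The `Node`/`Pod` classes and the scoring rule, which prefers the node with the most resources left over, are simplifying assumptions. The real scheduler runs many plugins in each phase.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # allocatable CPU not yet requested by other Pods
    free_memory_mib: int   # allocatable memory not yet requested by other Pods
    labels: Dict[str, str] = field(default_factory=dict)


@dataclass
class Pod:
    name: str
    cpu_request_millis: int
    memory_request_mib: int
    node_selector: Dict[str, str] = field(default_factory=dict)


def fits(pod: Pod, node: Node) -> bool:
    """Filter phase: drop nodes that lack resources or violate the label constraint."""
    enough = (pod.cpu_request_millis <= node.free_cpu_millis
              and pod.memory_request_mib <= node.free_memory_mib)
    labels_ok = all(node.labels.get(k) == v for k, v in pod.node_selector.items())
    return enough and labels_ok


def score(pod: Pod, node: Node) -> float:
    """Score phase: favour the node with the largest share of resources left over."""
    cpu_left = (node.free_cpu_millis - pod.cpu_request_millis) / max(node.free_cpu_millis, 1)
    mem_left = (node.free_memory_mib - pod.memory_request_mib) / max(node.free_memory_mib, 1)
    return (cpu_left + mem_left) / 2


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    feasible = [n for n in nodes if fits(pod, n)]
    if not feasible:
        return None  # no feasible node: the real scheduler leaves the Pod Pending
    return max(feasible, key=lambda n: score(pod, n)).name


nodes = [
    Node("node-a", 500, 1024, {"disktype": "ssd"}),
    Node("node-b", 4000, 8192, {"disktype": "ssd"}),
    Node("node-c", 8000, 16384, {"disktype": "hdd"}),
]
print(schedule(Pod("web", 1000, 512, {"disktype": "ssd"}), nodes))  # node-b
```

Here `node-a` is filtered out for lack of CPU and `node-c` for the label mismatch, so `node-b` wins without needing a tie-break.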

kube-controller-manager

kube-controller-manager is the control plane component that runs controller processes.

Logically, each controller is a separate process, but to reduce complexity, they are all compiled into a single binary and run in a single process.

There are many different types of controllers. Some examples are:

- Node controller: Responsible for noticing and responding when nodes go down.

- Job controller: Watches for Job objects that represent one-off tasks, then creates Pods to run those tasks to completion.

- EndpointSlice controller: Populates EndpointSlice objects (to provide a link between Services and Pods).

- ServiceAccount controller: Creates default ServiceAccounts for new namespaces.
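All of these controllers follow the same pattern: compare the desired state with the observed state, then act to close the gap. The sketch below shows one pass of such a loop for a replica count, in the spirit of "starting up a new pod when a Deployment's replicas field is unsatisfied". `ReplicaState` and `reconcile` are hypothetical. Real controllers watch the API server and create or delete Pods through API calls instead of mutating a list.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReplicaState:
    """Hypothetical snapshot of what a replica controller sees."""
    desired: int                                   # e.g. spec.replicas
    pods: List[str] = field(default_factory=list)  # names of Pods it currently owns
    next_id: int = 0


def reconcile(state: ReplicaState) -> List[str]:
    """One pass of a control loop: compare desired vs actual and act on the difference."""
    actions: List[str] = []
    while len(state.pods) < state.desired:
        name = f"web-{state.next_id}"
        state.next_id += 1
        state.pods.append(name)
        actions.append(f"create {name}")
    while len(state.pods) > state.desired:
        actions.append(f"delete {state.pods.pop()}")
    return actions


state = ReplicaState(desired=3)
print(reconcile(state))   # ['create web-0', 'create web-1', 'create web-2']
state.pods.remove("web-1")  # simulate a Pod dying
print(reconcile(state))   # ['create web-3']
state.desired = 1           # simulate scaling down
print(reconcile(state))   # ['delete web-3', 'delete web-2']
```

Running `reconcile` again when nothing has changed returns an empty list. This idempotence is what lets controllers run the loop repeatedly and safely.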

Node Components

Node components run on every node, maintaining running pods and providing the Kubernetes runtime environment.

kubelet

The kubelet is an agent that runs on each node in the cluster. It makes sure that containers are running in a Pod.

The kubelet takes a set of PodSpecs that are provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy. The kubelet doesn’t manage containers which were not created by Kubernetes.
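The kubelet's job can be sketched as a sync loop over the PodSpecs assigned to its node. It starts missing containers, restarts exited ones, stops containers of Pods that are no longer assigned, and skips containers it did not create. Everything here is hypothetical: the `ObservedContainer` type, the `managed_by_kubernetes` flag, the `"<pod>/<container>"` key format, and the assumption of `restartPolicy: Always`.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ObservedContainer:
    running: bool
    managed_by_kubernetes: bool  # hypothetical flag for "created by the kubelet"


def sync_node(desired: Dict[str, List[str]],
              observed: Dict[str, ObservedContainer]) -> List[str]:
    """desired: Pod name -> container names from its PodSpec.
    observed: container key -> state reported by the runtime; Kubernetes-managed
    containers are keyed "<pod>/<container>"."""
    actions: List[str] = []
    for pod, containers in desired.items():
        for container in containers:
            key = f"{pod}/{container}"
            state = observed.get(key)
            if state is None:
                actions.append(f"start {key}")
            elif not state.running:
                actions.append(f"restart {key}")  # assumes restartPolicy: Always
    for key, state in observed.items():
        if not state.managed_by_kubernetes:
            continue  # containers not created by Kubernetes are left alone
        if key.split("/", 1)[0] not in desired:
            actions.append(f"stop {key}")  # Pod no longer assigned to this node
    return actions


desired = {"web": ["nginx", "log-shipper"]}
observed = {
    "web/nginx": ObservedContainer(running=True, managed_by_kubernetes=True),
    "old/app": ObservedContainer(running=True, managed_by_kubernetes=True),
    "manual-redis": ObservedContainer(running=True, managed_by_kubernetes=False),
}
print(sync_node(desired, observed))  # ['start web/log-shipper', 'stop old/app']
```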

kube-proxy

kube-proxy is a network proxy that runs on each node in your cluster, implementing part of the Kubernetes Service concept.

kube-proxy maintains network rules on nodes. These network rules allow network communication to your Pods from network sessions inside or outside of your cluster.

kube-proxy uses the operating system packet filtering layer if there is one and it’s available. Otherwise, kube-proxy forwards the traffic itself.
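Conceptually, the rules kube-proxy maintains map a Service's virtual IP and port to the addresses of the Pods behind it. The toy model below does this translation in Python. In iptables or IPVS mode, kube-proxy only programs kernel rules and does not forward packets itself. The sketch uses round-robin so its output is deterministic (iptables mode picks backends randomly). `ToyServiceProxy` and the example addresses are hypothetical.

```python
import itertools
from typing import Dict, Iterator, List, Tuple

Address = Tuple[str, int]  # (IP, port)


class ToyServiceProxy:
    """Hypothetical model of per-node Service rules: virtual IP -> backend Pods."""

    def __init__(self) -> None:
        self._rules: Dict[Address, Iterator[Address]] = {}

    def sync_service(self, cluster_ip: Address, endpoints: List[Address]) -> None:
        # Re-run whenever the Service's EndpointSlices change.
        if endpoints:
            self._rules[cluster_ip] = itertools.cycle(list(endpoints))
        else:
            self._rules.pop(cluster_ip, None)  # no ready backends: drop the rule

    def route(self, destination: Address) -> Address:
        # Translate a connection to the Service's virtual IP into a real Pod address.
        if destination not in self._rules:
            raise ConnectionRefusedError(f"no endpoints for {destination}")
        return next(self._rules[destination])


proxy = ToyServiceProxy()
svc = ("10.96.0.10", 80)
proxy.sync_service(svc, [("10.244.1.5", 8080), ("10.244.2.7", 8080)])
print([proxy.route(svc) for _ in range(3)])
# [('10.244.1.5', 8080), ('10.244.2.7', 8080), ('10.244.1.5', 8080)]
```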

Container runtime

The container runtime is the component that lets Kubernetes run containers. It is responsible for managing the execution and lifecycle of containers on each node.

Kubernetes supports container runtimes such as containerd, CRI-O, and any other implementation of the Kubernetes CRI (Container Runtime Interface).
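The CRI is what makes the runtime pluggable: the kubelet programs against an interface, and containerd, CRI-O, or any other implementation satisfies it. The sketch below expresses that idea as a Python `Protocol`. The real CRI is a gRPC API with RPCs such as `RunPodSandbox`, `CreateContainer`, `StartContainer` and `StopContainer`. The snake_case methods, `FakeRuntime` and `launch` are hypothetical simplifications.

```python
from typing import Dict, List, Protocol


class ContainerRuntime(Protocol):
    """Simplified, hypothetical stand-in for the CRI RuntimeService."""

    def run_pod_sandbox(self, pod_name: str) -> str: ...
    def create_container(self, sandbox_id: str, image: str) -> str: ...
    def start_container(self, container_id: str) -> None: ...
    def stop_container(self, container_id: str) -> None: ...


class FakeRuntime:
    """In-memory runtime: any class with these methods can be plugged in."""

    def __init__(self) -> None:
        self.states: Dict[str, str] = {}
        self.images: Dict[str, str] = {}
        self._counter = 0

    def _new_id(self, prefix: str) -> str:
        self._counter += 1
        return f"{prefix}-{self._counter}"

    def run_pod_sandbox(self, pod_name: str) -> str:
        sandbox_id = self._new_id("sandbox")
        self.states[sandbox_id] = "ready"
        return sandbox_id

    def create_container(self, sandbox_id: str, image: str) -> str:
        if self.states.get(sandbox_id) != "ready":
            raise KeyError(f"unknown sandbox {sandbox_id}")
        container_id = self._new_id("ctr")
        self.states[container_id] = "created"
        self.images[container_id] = image
        return container_id

    def start_container(self, container_id: str) -> None:
        self.states[container_id] = "running"

    def stop_container(self, container_id: str) -> None:
        self.states[container_id] = "exited"


def launch(runtime: ContainerRuntime, pod_name: str, images: List[str]) -> List[str]:
    """Kubelet-like caller: it depends only on the interface, not on a specific runtime."""
    sandbox_id = runtime.run_pod_sandbox(pod_name)
    container_ids = [runtime.create_container(sandbox_id, image) for image in images]
    for container_id in container_ids:
        runtime.start_container(container_id)
    return container_ids


runtime = FakeRuntime()
print(launch(runtime, "web", ["nginx:1.25", "busybox:1.36"]))  # ['ctr-2', 'ctr-3']
```

The design point is that `launch` never names a concrete runtime. Swapping `FakeRuntime` for another conforming class requires no change to the caller.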

What is the difference between kubectl and kubelet?

kubectl: The command-line tool users run to interact with a Kubernetes cluster. It sends requests to the API server to deploy, inspect, manage, and troubleshoot applications, and it can run anywhere that can reach the API server, typically a user's workstation.

kubelet: The node agent that runs on every node. It manages the lifecycle of the Pods assigned to that node, ensures their containers run as specified, and reports node and Pod status back to the control plane through the API server.
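kubectl is essentially a client for the API server's REST API. The helper below sketches how a few `kubectl get` commands map to REST paths: core resources live under `/api/v1`, and resources in named API groups live under `/apis/<group>/<version>`. The `resource_path` function is hypothetical and only builds the path; it does not send any request. You can see the real requests kubectl makes by raising its verbosity, for example `kubectl get pods -v=6`.

```python
from typing import Optional


def resource_path(resource: str, namespace: Optional[str] = None,
                  name: Optional[str] = None, group: str = "", version: str = "v1") -> str:
    """Build the REST path a client such as kubectl requests from the API server."""
    # Core ("legacy") resources such as Pods live under /api/v1;
    # resources in named groups (e.g. apps) live under /apis/<group>/<version>.
    path = f"/api/{version}" if not group else f"/apis/{group}/{version}"
    if namespace:
        path += f"/namespaces/{namespace}"
    path += f"/{resource}"
    if name:
        path += f"/{name}"
    return path


# kubectl get pods -n default
print(resource_path("pods", namespace="default"))
# /api/v1/namespaces/default/pods

# kubectl get deployment web -n prod
print(resource_path("deployments", namespace="prod", name="web", group="apps"))
# /apis/apps/v1/namespaces/prod/deployments/web

# kubectl get nodes  (cluster-scoped, so no namespace segment)
print(resource_path("nodes"))
# /api/v1/nodes
```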

Explain the role of the API server.

The API server in Kubernetes plays a central role in the operation of the Kubernetes cluster. Here’s a concise overview of its functions:

- Front End to the Control Plane: The API server acts as the front end for the Kubernetes control plane. It serves as the main management point that processes REST requests, validates them, and executes the business logic.

- Resource Management: It handles CRUD (Create, Read, Update, Delete) operations for Kubernetes resources such as pods, services, replication controllers, and others.

- Authentication and Authorization: The API server is responsible for verifying the identity of users, nodes, and controllers, and ensuring that they have authorization to access specific resources.

- API Gateway: It exposes Kubernetes API and handles external and internal requests. It acts as a gateway through which all internal components (like the scheduler, kubelet, controller manager) and external user commands interact with the Kubernetes cluster.

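- Data Store Interface: The API server is the only control plane component that talks to etcd directly. It reads and writes the state and configuration of all cluster resources there, and every other component accesses that state through the API server, which keeps the data consistent and durable.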

- Scalability and Availability: The API server is designed to scale horizontally. This means you can run multiple instances of the API server to distribute traffic and improve the reliability and availability of the cluster’s control plane.
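The responsibilities listed above can be sketched as one request pipeline: authenticate the caller, authorize the action, validate the object, then persist it. The model below is a deliberately small, hypothetical version of that flow. `TOKENS`, `RULES` and `STORE` stand in for real authentication, RBAC and etcd. Admission control (mutating and validating webhooks and plugins) is folded into a single validation check. The HTTP status codes match the kinds of errors the real API server returns (401, 403, 422, 409), but the function is not its implementation.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Set, Tuple


@dataclass
class Request:
    token: str
    verb: str        # e.g. "create" or "get"
    resource: str    # e.g. "pods"
    namespace: str
    name: str
    body: Dict[str, Any] = field(default_factory=dict)


# Hypothetical stand-ins for real authentication, RBAC rules and etcd.
TOKENS: Dict[str, str] = {"token-alice": "alice"}
RULES: Set[Tuple[str, str, str]] = {("alice", "create", "pods"), ("alice", "get", "pods")}
STORE: Dict[str, Dict[str, Any]] = {}


def handle(req: Request) -> Tuple[int, str]:
    user = TOKENS.get(req.token)
    if user is None:
        return 401, "Unauthorized"                   # 1. authentication
    if (user, req.verb, req.resource) not in RULES:
        return 403, "Forbidden"                      # 2. authorization
    key = f"/registry/{req.resource}/{req.namespace}/{req.name}"
    if req.verb == "create":
        spec = req.body.get("spec")
        if not isinstance(spec, dict) or not spec.get("containers"):
            return 422, "Invalid: spec.containers is required"  # 3. validation
        if key in STORE:
            return 409, "AlreadyExists"
        STORE[key] = req.body                        # 4. persist (etcd in reality)
        return 201, "Created"
    if req.verb == "get":
        return (200, "OK") if key in STORE else (404, "NotFound")
    return 405, "MethodNotAllowed"                   # other verbs are out of scope here


pod = {"spec": {"containers": [{"name": "nginx", "image": "nginx:1.25"}]}}
print(handle(Request("token-alice", "create", "pods", "default", "web", pod)))  # (201, 'Created')
print(handle(Request("token-alice", "delete", "pods", "default", "web")))       # (403, 'Forbidden')
print(handle(Request("bad-token", "get", "pods", "default", "web")))            # (401, 'Unauthorized')
```

Because this logic is stateless apart from the store, several copies of it could sit behind a load balancer. That is the same reason the real API server can scale horizontally while etcd holds the shared state.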
